Learning the Data Acquisition


The prevailing approach to AI in healthcare relies primarily on retrospectively collected datasets, such as medical images and electronic health records (EHRs), to train machine learning models that mimic clinical inference. While this approach seems reasonable, it is fundamentally suboptimal, as the limited adoption of AI in real-world clinical practice suggests.

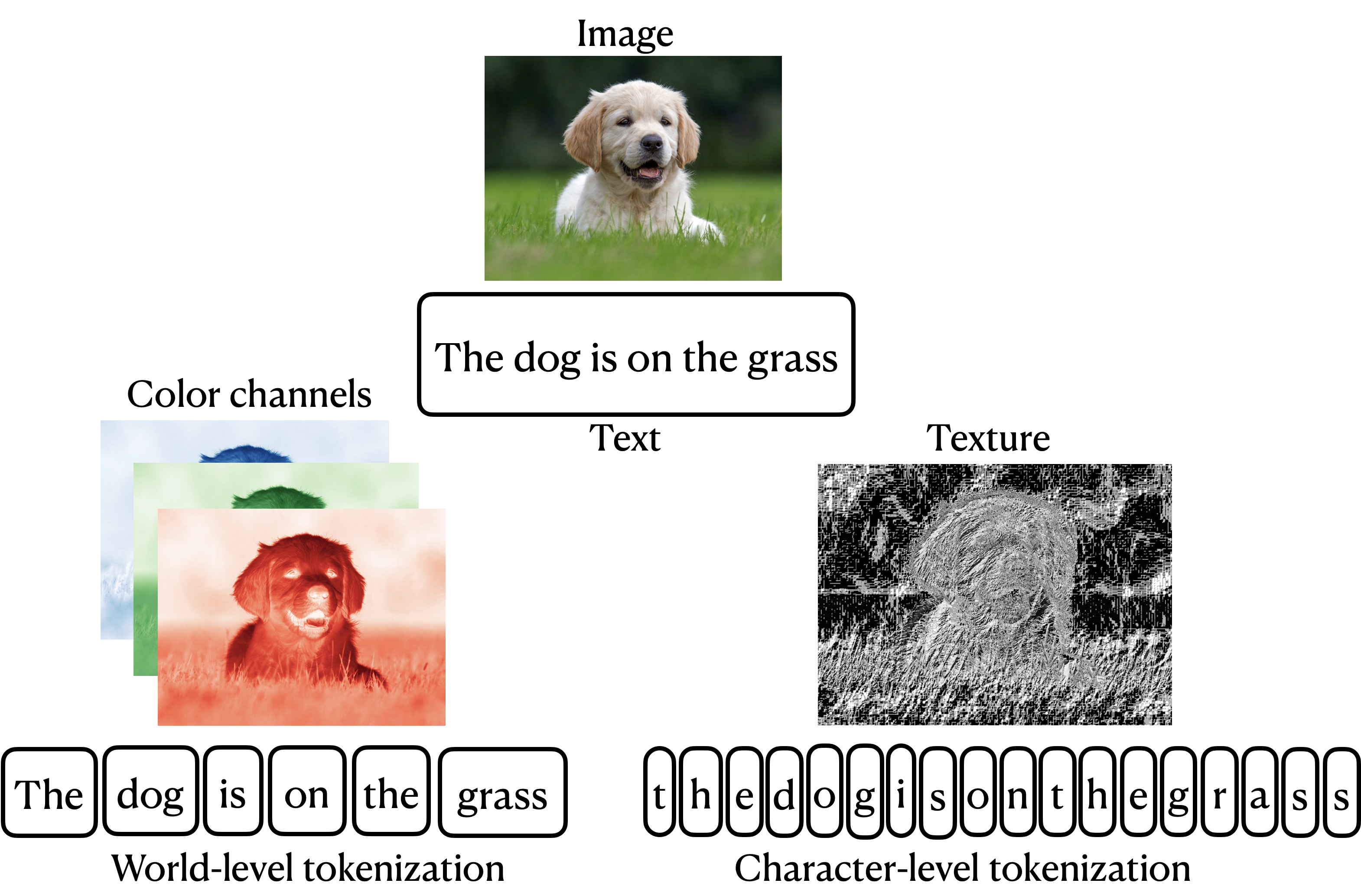

One major limitation stems from the nature of healthcare datasets: they are designed for human interpretability, meaning they are structured to ensure clinicians can detect abnormalities. However, machine learning models are not bound by this constraint—why limit them to the same data types that humans rely on? Moreover, human perception itself is inherently limited. What hidden patterns or signals exist within these datasets that we are currently overlooking?

With these challenges in mind, my research lab focuses on two key themes:

Can we determine the most informative datasets—whether human-interpretable or not—that provide the richest signals for AI-driven insights?

Can we detect hidden signals within existing datasets to reveal previously unnoticed patterns and observations?

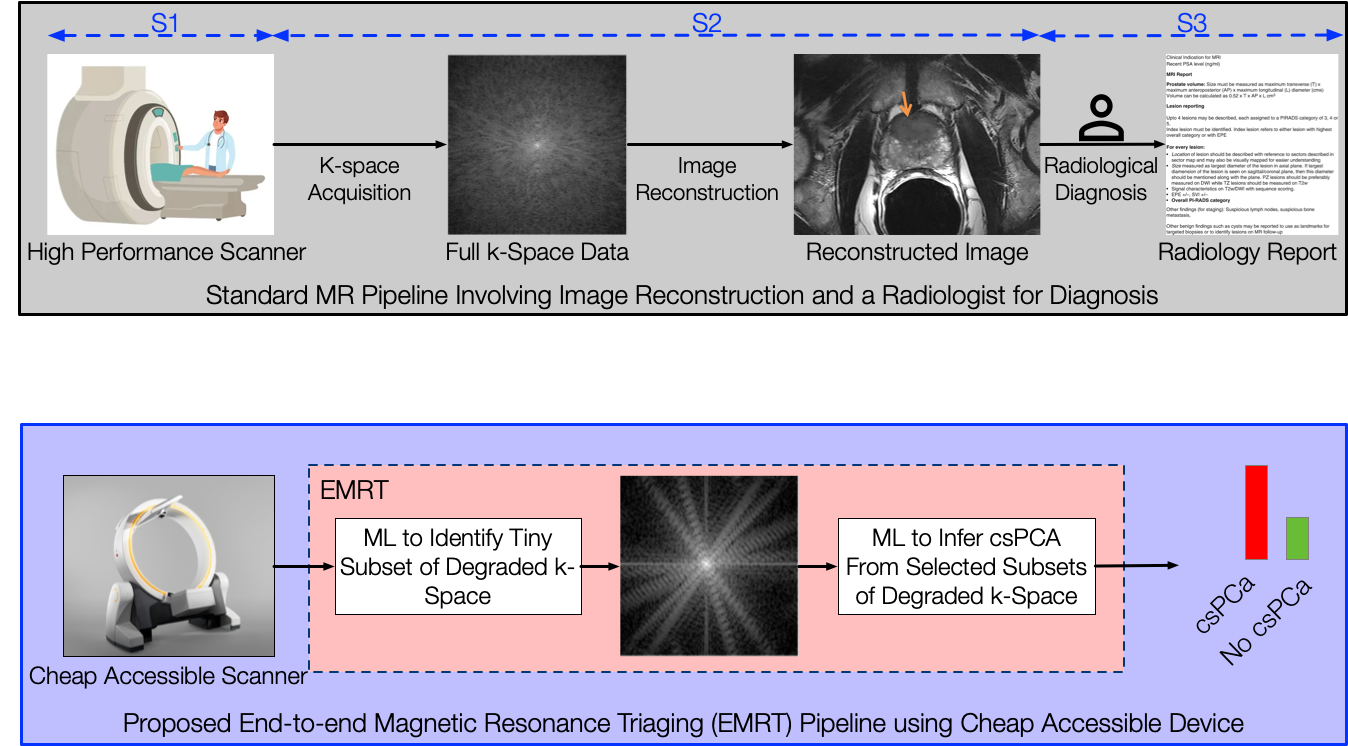

We use AI to enable MR-based diagnostics, a highly accurate but expensive and often inaccessible technology, for early disease detection at the population level, thereby democratizing access to this advanced diagnostic modality. We accomplish this by learning disease signatures directly in the raw frequency space (k-space), without reconstructing high-fidelity images.
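To make the idea of detecting disease without reconstruction concrete, here is a minimal sketch under heavily simplified assumptions: synthetic 32 by 32 "scans" (a Gaussian blob plus noise, with a bright square standing in for a lesion) are converted to k-space with an orthonormal 2-D FFT, only a tiny low-frequency crop of k-space is kept, and a plain logistic regression is trained on its real and imaginary parts. The toy data generator, crop size, and classifier (`make_image`, `kspace_features`, `train_logreg`) are hypothetical illustrations, not the lab's models; real systems would work with complex multi-coil data and far more expressive models.

```python
import numpy as np

N = 32  # toy image size (hypothetical)

def make_image(rng, lesion):
    """Toy 'anatomy': a smooth Gaussian blob plus noise, optionally with a bright 4x4 'lesion'."""
    yy, xx = np.mgrid[0:N, 0:N]
    img = np.exp(-((yy - N / 2) ** 2 + (xx - N / 2) ** 2) / (2 * (N / 4) ** 2))
    img = img + rng.normal(0.0, 0.1, size=(N, N))
    if lesion:
        r, c = rng.integers(8, N - 12, size=2)
        img[r:r + 4, c:c + 4] += 1.0
    return img

def kspace_features(img, crop=4):
    """Simulate acquisition with a centered orthonormal 2-D FFT, then keep only a small
    low-frequency crop of k-space as real/imaginary features (no image reconstruction)."""
    k = np.fft.fftshift(np.fft.fft2(img, norm="ortho"))
    lo, hi = N // 2 - crop // 2, N // 2 + crop // 2
    patch = k[lo:hi, lo:hi]
    return np.concatenate([patch.real.ravel(), patch.imag.ravel()])

def make_dataset(rng, n_samples):
    labels = rng.integers(0, 2, size=n_samples)
    X = np.stack([kspace_features(make_image(rng, bool(lab))) for lab in labels])
    return X, labels.astype(float)

def train_logreg(X, y, lr=0.1, steps=2000):
    """Logistic regression fitted by full-batch gradient descent on the mean log-loss."""
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))
        w -= lr * X.T @ (p - y) / len(y)
        b -= lr * float(np.mean(p - y))
    return w, b

def run_demo(seed=0):
    """Train on 400 toy scans and return accuracy on 200 held-out scans."""
    rng = np.random.default_rng(seed)
    X_tr, y_tr = make_dataset(rng, 400)
    X_te, y_te = make_dataset(rng, 200)
    mu, sd = X_tr.mean(axis=0), X_tr.std(axis=0) + 1e-8  # standardize with training statistics
    w, b = train_logreg((X_tr - mu) / sd, y_tr)
    pred = ((X_te - mu) / sd) @ w + b > 0
    return float(np.mean(pred == y_te))

if __name__ == "__main__":
    print(f"held-out accuracy from k-space features: {run_demo():.2f}")
```

In this toy setting the disease signal survives in a handful of low-frequency coefficients (most strongly the DC term, which rises with the lesion's total intensity), so a classifier can decide without ever forming an image.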

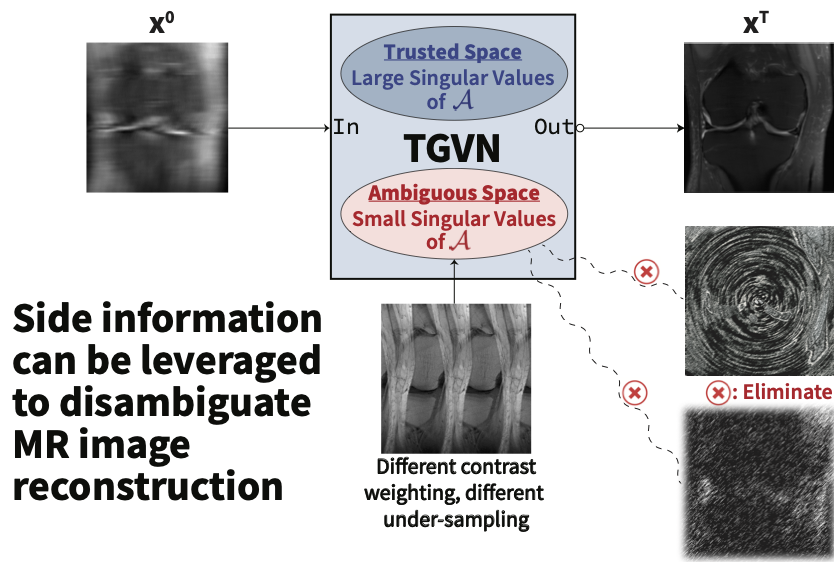

Envision MRI scanners equipped with “patient-specific memory,” capable of recalling and leveraging multiple sources of prior data (e.g., prior imaging, EHR) from the same individual—rather than relying solely on the current scan. Freed from the requirement to collect measurements near the Nyquist rate, these scanners can dramatically reduce scan times without sacrificing image quality, even on lower-cost machines, enabling accessible imaging.
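One simple way to see how a prior scan can stand in for skipped measurements is a prior-regularized least-squares reconstruction, sketched below under strong assumptions: a single coil, a Cartesian mask that keeps a random subset of phase-encode columns plus the lowest frequencies, and a prior scan that is already perfectly registered to the current one. It minimizes ||M F x - y||^2 + λ||x - x_prior||^2, where F is the orthonormal 2-D FFT, M the sampling mask, and y the acquired k-space; because F is unitary, the minimizer has a closed form at each k-space location. The function names, the value of λ, and the disk phantom are hypothetical, and this textbook baseline is not the lab's patient-specific approach.

```python
import numpy as np

def fft2c(x):
    """Orthonormal (unitary) 2-D FFT: the forward model F."""
    return np.fft.fft2(x, norm="ortho")

def ifft2c(k):
    """Inverse of fft2c."""
    return np.fft.ifft2(k, norm="ortho")

def recon_with_prior(y, mask, prior, lam=0.1):
    """Closed-form minimizer of ||M F x - y||^2 + lam * ||x - prior||^2.
    Because F is unitary, the objective separates per k-space location:
    sampled entries blend the measurement with the prior's k-space,
    unsampled entries are filled entirely from the prior."""
    k_prior = fft2c(prior)
    k = np.where(mask, (y + lam * k_prior) / (1.0 + lam), k_prior)
    # Keep the real part as the image estimate (the toy images are real-valued).
    return ifft2c(k).real

def demo(seed=0, n=64, keep=0.2):
    """Compare zero-filled and prior-informed reconstructions on a toy phantom."""
    rng = np.random.default_rng(seed)
    yy, xx = np.mgrid[0:n, 0:n]
    prior = (((yy - n / 2) ** 2 + (xx - n / 2) ** 2) < (n / 3) ** 2).astype(float)
    current = prior.copy()
    current[20:26, 30:36] += 0.5  # hypothetical change since the prior scan
    cols = rng.random(n) < keep   # random subset of phase-encode columns
    cols[:4] = True               # always keep the lowest frequencies
    cols[-4:] = True              # (unshifted FFT: low frequencies sit at both ends)
    mask = np.zeros((n, n), dtype=bool)
    mask[:, cols] = True
    y = mask * fft2c(current)     # acquired (undersampled) k-space, zeros elsewhere
    zero_filled = ifft2c(y).real
    with_prior = recon_with_prior(y, mask, prior)
    err = lambda img: float(np.linalg.norm(img - current))
    return err(zero_filled), err(with_prior)

if __name__ == "__main__":
    e_zf, e_prior = demo()
    print(f"zero-filled error: {e_zf:.3f}, prior-informed error: {e_prior:.3f}")
```

With a well-matched prior, the remaining error comes only from what changed between scans (here, the small simulated finding), which is why far fewer than Nyquist-rate measurements can suffice in this idealized case.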

Traditional approaches to multi-modal learning are suboptimal because they predominantly focus on capturing either inter-modality dependencies or intra-modality dependencies in isolation. Viewing the problem through the lens of generative models, we treat the target as the source of the multiple modalities and of the interactions between them, and propose the I2M2 framework, which naturally captures both inter- and intra-modality dependencies and leads to more accurate predictions.
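The sketch below shows one way to combine intra- and inter-modality models, assuming a product-of-experts rule: one classifier per modality (intra-modality) and one classifier that sees all modalities jointly (inter-modality), whose class log-probabilities are summed and renormalized. This combination rule is an assumption made for illustration and may not match I2M2's exact formulation; the function names and example logits are hypothetical.

```python
import numpy as np

def log_softmax(z):
    """Numerically stable log-softmax over the last axis."""
    z = np.asarray(z, dtype=float)
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def combine_experts(intra_logits, inter_logits):
    """Product of experts: p(y | x_1..x_M) is proportional to
    prod_m p_m(y | x_m) * p_joint(y | x_1..x_M).
    intra_logits: list of per-modality logit arrays (intra-modality models).
    inter_logits: logits from a model that sees all modalities (inter-modality model)."""
    total = log_softmax(inter_logits)
    for logits in intra_logits:
        total = total + log_softmax(logits)
    return np.exp(log_softmax(total))  # renormalize the product over classes

if __name__ == "__main__":
    image_logits = np.array([1.2, -0.3])  # hypothetical image-only model output
    ehr_logits = np.array([0.1, 0.4])     # hypothetical EHR-only model output
    joint_logits = np.array([0.8, 0.0])   # hypothetical model over image + EHR
    print(combine_experts([image_logits, ehr_logits], joint_logits))
```

In a full model, one natural choice is to train all experts jointly by minimizing cross-entropy on the combined output, so that each expert is pushed to capture signal the others miss.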

An MR scanner captures a vast array of high-quality k-space measurements to generate detailed cross-sectional images. However, this process is inherently slow and expensive, as the amount of data collected remains constant regardless of patient characteristics or the suspected disease. We propose a method that learns an adaptive policy to selectively acquire k-space measurements, optimizing for disease detection without the need for image reconstruction.
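A full learned acquisition policy is beyond a short example, but the sketch below illustrates the core idea of patient-specific acquisition under strongly simplifying assumptions: each k-space line is summarized by one real value, the disease score is a hypothetical linear function s = sum_j w_j * k_j of those values, and unacquired lines follow independent zero-mean Gaussian priors. Lines are acquired in order of how much score variance they remove, and acquisition stops as soon as the posterior probability of disease crosses a confidence threshold, so clear-cut cases need fewer measurements. Here the line ordering is fixed and only the stopping point adapts to the patient, whereas a learned policy like the one described above would also choose which line to acquire next from the measurements so far. All names and parameters are illustrative.

```python
import numpy as np
from math import erf, sqrt

def normal_cdf(z):
    """Standard normal CDF."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def acquire_adaptively(k_lines, w, prior_var, threshold=0.0, confidence=0.95):
    """Acquire k-space lines one at a time until the disease decision is confident.

    k_lines:   value of each line, revealed only once it is "acquired" (hypothetical)
    w:         weights of the linear disease score s = sum_j w[j] * k_lines[j]
    prior_var: prior variance of each line's value (independent zero-mean Gaussians)
    Returns (has_disease, number_of_lines_acquired).
    """
    order = np.argsort(-(w ** 2) * prior_var)  # lines that remove the most score variance first
    mean = 0.0                                  # posterior mean of s given acquired lines
    var = float(np.sum(w ** 2 * prior_var))     # posterior variance of s (nothing acquired yet)
    for n_acquired, j in enumerate(order, start=1):
        mean += w[j] * k_lines[j]
        var = max(var - w[j] ** 2 * prior_var[j], 0.0)
        if var > 1e-12:
            p_disease = normal_cdf((mean - threshold) / sqrt(var))
        else:
            p_disease = float(mean > threshold)  # every line acquired: the score is known
        if p_disease >= confidence or p_disease <= 1.0 - confidence:
            return p_disease >= 0.5, n_acquired
    return bool(mean > threshold), len(order)

if __name__ == "__main__":
    w = np.array([1.0, 0.5, 0.25, 0.1])
    prior_var = np.ones(4)
    print(acquire_adaptively(np.array([5.0, 0.0, 0.0, 0.0]), w, prior_var))  # (True, 1)
    print(acquire_adaptively(np.array([0.1, 0.1, 0.1, 0.1]), w, prior_var))  # (True, 3)
```

The clear-cut case is decided after a single line, while the ambiguous one needs three of the four, which mirrors the motivation above: the amount of data collected no longer has to be the same for every patient.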

AI in radiology is transforming more than medical image analysis; it is reshaping the entire radiological ecosystem, from optimizing workflows to enhancing training. We are pioneering the world's first AI-driven platform designed to deliver a truly personalized educational experience for radiology residents. Powered by large language models (LLMs), our adaptive system tailors learning to each resident's unique journey by analyzing their past case exposure, strengths, and areas for improvement. With these insights, we are redefining how radiologists learn, grow, and excel in their field.